Researchers said their 'unbiased' facial recognition could identify potential future criminals — then deleted the announcement after backlash

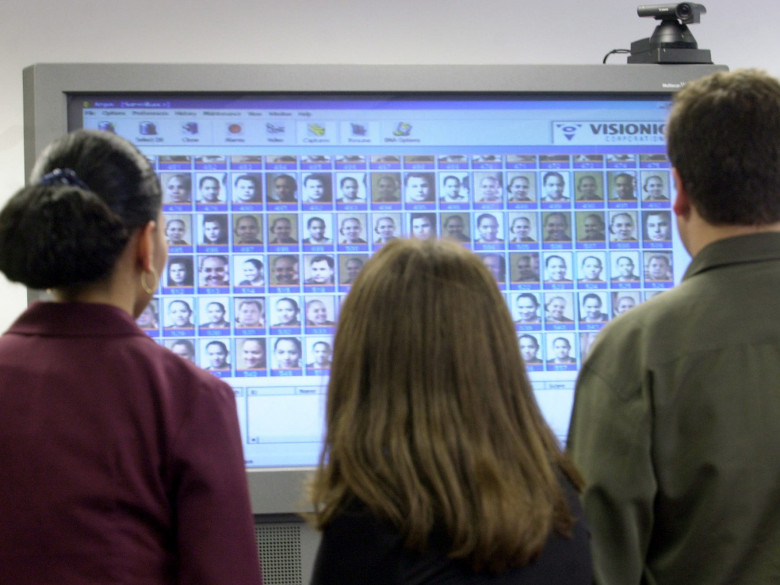

A team of researchers made waves this week with a bold, as-yet-unsubstantiated claim: They built software, they said, that can predict whether someone is a criminal based on a picture of their face.

In a now-deleted press release, Harrisburg University announced that the technology is "capable of predicting whether someone is likely going to be a criminal." The release said the software was built by professors Nathaniel Ashby and Roozbeh Sadeghian alongside NYPD veteran Jonathan Korn, a Ph.D. student.

In the 24 hours following its publication, the release was met with swift backlash from academics, data analysts, and civil liberties advocates, who said the Harrisburg University researchers' claims were unrealistic and irresponsible. Critics also highlighted the supposed technology's potential for bias, especially given that existing facial recognition software has been found to misidentify people of color as much as 100 times more often than white people.